Today is as good a day as any, perhaps better than most, for a reality check, a moment to reckon with the unsettling fragility of what we presume to know. Ours is a world propelled by information, a restless engine driving decisions both grand and granular, from the lofty chambers of governments to the intimate spaces of family life. But consider this: the society we now inhabit, awash in torrents of data, has done something profound. It has traded literature, the rich tapestry of human insight and reflection, for the sterile calculus of information. And in doing so, it has forged a curious identity, one that charts its course not by wisdom but by algorithms, not by art but by metrics. Now, on the question of how reliable the information we receive from online systems really is, something new and disturbing has emerged from OpenAI, the artificial intelligence research organization and technology company behind ChatGPT, the popular artificial intelligence (AI) system designed to generate human-like text for conversation, creative writing, coding, and more.

First, some context for the news to be shared: we should consider how our notions of knowledge in the Western world have developed.

We all remember when information was seen as the accumulation of expertise over time and through extended research. We in the Western world perhaps owe this “knowledge ethos” more to our Greek ancestors than to any other historical source, since it was in Athens that the hunger for rhetoric, rationality, logic, and public discourse that modernity inherited was born. At that time, knowledge was defined less as an encyclopedic command of facts, as it is today, than as the ability to reason toward truth; that ability is what really characterized Greek philosophy, inquiry, and democracy.

In Homer’s time, facts were not considered the primary form of knowledge, because people could lie, facts could be misremembered, and reports of the same event could vary across a range of witnesses, each of whom might have seen what happened from a different perspective. Indeed, in the land where democracy was born, bare facts were dismissed like the incessant rattling of a child clamoring to be believed. But sense, argument, and logic are universal, and once applied to whatever facts are available, logic proves to be timeless and rigorous.

This belief in the logic of knowledge was also central for Socrates, the foundational figure of Western philosophy, whose contributions to epistemology (the study of knowledge) were grounded in his distinctive method of inquiry, his skepticism of certain claims to knowledge, and his emphasis on self-awareness and moral truth. Working logic in reverse, from a stated belief back to the contradictions it implies, yet without abandoning logic’s standard of truth, Socrates pioneered the dialectical approach to knowledge often called the “Socratic Method,” which involved engaging in dialogue to examine and deconstruct beliefs through questioning. Socrates exposed contradictions in people’s reasoning and challenged them to refine their understanding. Emphasizing that true knowledge requires justification and coherence rather than mere opinion or tradition, his approach shifted the focus from memorized information to critical thinking and self-examination.

We have lost this feeling for logic. Over more than two millennia, the ability to reason remained important, even though it took some detours before becoming what we consider “logical, sensible knowledge” today. But how did logic lose its status as the arbiter of truth?

In the Dark Ages, reasoning was often based on beliefs derived as much from esoteric sources as from whimsical bias. Revulsion at the shape of someone’s head, for example, could be sufficient “reason” for rejecting, perhaps condemning, that person. A similar belief, that the weight of one’s heart indicated its purity or impurity, appears in many depictions of the time, as in a 15th-century Book of Hours produced in the West Flemish (now Belgian) city of Bruges, where Jesus is shown floating above the partially sinking bodies of men, presumably “brought down” by the weight of their sins.

Eventually, as prejudice invaded and infected religious thought under the guise of such “logic,” it came to seem acceptable to incarcerate, torture, and murder anyone who might fit certain capricious criteria. Perhaps the most memorable parody of this obsession with superstition-as-logic is the witch-trial scene in the classic comedy Monty Python and the Holy Grail, which may be the most ridiculous “truth-seeking” scene ever filmed.

The Inquisition spanned centuries and several regions, including the Medieval Inquisition (12th century), the Spanish Inquisition (1478–1834), the Roman Inquisition (1542–1859), and others; by some estimates, up to 100,000 people were killed in all for religious reasons. Many trials were no doubt guided by the kind of fallacious reasoning portrayed in this film scene.

If ever there was a reason to rethink reason, this comedic scene epitomizes it, for such craziness was only too real in Europe. It justified the emergence of a humanism that, in reaction to superstitious “reasoning,” rigorously avoided all forms of “subjective” or “spiritual” reasoning. Books became the weapons of unstoppable transformation; learning became the playbook for modernity; and universities, not abbeys or convents, rose to become the great, authoritative guilds and bastions of real reason.

A new love of knowledge marked the period known as the Enlightenment, which is often dated from around 1650. In its first phase, which built on the “Scientific Revolution,” thinkers like René Descartes, Isaac Newton, John Locke, and Baruch Spinoza laid the groundwork for Enlightenment ideas. Starting around 1715, the Enlightenment reached its intellectual and cultural peak, with contributions in political theory (e.g., social contract theory), critiques of organized religion, economic liberalism, and encyclopedism, directions pioneered by Voltaire, Jean-Jacques Rousseau, Montesquieu, Denis Diderot, David Hume, Adam Smith, and Immanuel Kant.

This second phase extended into the era of the French Revolution and the early Industrial Revolution, and the Enlightenment came to represent the scientific method for understanding the natural world; individual natural rights, freedom of speech, and personal autonomy; secularism, a move away from the dominance of religious institutions in intellectual and political life; a deeper skepticism of authority that led to the questioning of monarchy, aristocracy, and religious dogma; and an overall spirit of progress and optimism, with the belief that human society could improve through reason and science.

In this way, research replaced prayer as the path of inner growth, especially in areas with growing populations. Emerging from the Middle Ages, this new, modern vision for Europe was forged under the influence of Amsterdam, Venice, Paris, London, and other cities that thrived as they grew more secular, focusing on commerce rather than on pious subservience to the Vatican or the Holy Roman Empire. By contrast, more religiously traditional cities fell behind; Rome, Seville, Lisbon, Madrid, and Vienna in particular remained tied to a more orthodox path. Rome remained a deeply religious city as the seat of the Catholic Church, and while it enjoyed a certain level of wealth due to papal patronage, its economy was less commercially diverse. The city’s economic base remained tied to ecclesiastical revenues and the papal bureaucracy, and it embraced trade or industry only cautiously. Similarly, Seville remained an emphatically religious city, with the Catholic Church wielding significant influence, and its economy became heavily dependent on Church-related activities. While Seville did prosper during the 16th century as the gateway for New World trade, its wealth had declined noticeably by the 17th century. Lisbon, too, retained its deeply religious character, and the influence of the Inquisition stifled intellectual and economic innovation. For its part, as the capital of Catholic Spain, Madrid remained a religious stronghold and was politically significant, but it did not experience the same level of economic growth as cities like Amsterdam or London. Many historians argue that Spain’s rigid adherence to Catholicism, enforced by the Inquisition, discouraged economic dynamism and intellectual freedom. Vienna, the capital of the Habsburg Empire, likewise remained an intensely Catholic city shaped by the Counter-Reformation; it retained great political and cultural importance, but its economy was not as diversified or robust as those of more secular cities.

Living as we do in a hyper-contemporary society, we can easily forget the history and impact of knowledge and reason on the lives of millions of Europeans over five centuries. Developments in the Middle East, on the other hand, were less intellectualized, and this brought sobering consequences for the Arab world, as we read in books like “What Went Wrong?: The Clash Between Islam and Modernity in the Middle East” by Bernard Lewis.

In What Went Wrong?, Lewis investigates the decline of Muslim-majority societies relative to the Western world, asking why the Islamic world, which was once a center of scientific and cultural advancement, began to lag behind Europe in terms of technological and economic development. Lewis examines the rise of Western influence, colonialism, and the failures of reform movements in the Middle East, exploring how political, social, and religious factors contributed to a resistance to modernization.

All of this historical context is recalled to bring a double change into focus. First, modernity gave us the promise of research, which seeks facts as the direct path to reality, and research itself evolved from the modern version of the Greek approach, reason, which emphasizes ideas as the means to truth. Second, modernity has given way to the hyper-contemporary, or postmodern, condition, which has replaced research with anything that produces seemingly similar results in less time and with less effort.

It might be impossible to deny that the spread of digital media, which accelerated after 1999, became the greatest catalyst transforming all contemporary societies, especially in the West. At first, in the late 1980s and early 1990s, digital media’s role seemed very promising; there was a sense of greater communication and community, and people in geographically disparate parts of the globe could now come together in what Howard Rheingold celebrated in his 1993 book, The Virtual Community, a pioneering exploration of online communities and their impact on society, communication, and human relationships.

Rheingold drew on his experiences within early online networks like The WELL (Whole Earth 'Lectronic Link), one of the first large virtual communities, to examine how people interacted in virtual spaces, formed communities, and built relationships in the absence of physical proximity. The book conveys the excitement and potential he saw in these emerging digital networks, which he believed could foster collaborative learning, mutual support, and even activism. For Rheingold, virtual communities allowed people to create new social norms, share information, and support one another, all as a new form of “homesteading” on an electronic frontier. Rheingold saw the internet as a force able to reduce geographical barriers, enabling people with shared interests and values to connect in ways that were previously impossible. The Virtual Community presented both a hopeful and a cautionary view of how the internet could transform human social dynamics, as Rheingold also warned of potential drawbacks such as misinformation, the digital divide, and the loss of privacy in online interactions. But all of this was before social media, which lowered the barrier to digital participation by bringing many people together in pithy dialogue bites at the click of a button. It wasn’t long before governments and radical groups weaponized social media to the point that a presidential election in 2016 could be threatened by many thousands of web bots pumping disinformation through fake accounts on Twitter and other digital platforms. By then, what became hashtag culture had lost its history, its background, and its bottom.

What this new culture didn’t lose was its appetite for instant feedback; the extended attention span of diligent research would never again return with the utopian promise it had offered barely ten years earlier. No one, it seems, wanted any longer to embark on the journey of knowledge discovery; all that mattered was the instant synopsis on screen. Encyclopedias of all kinds became dinosaurs: Collier’s Encyclopedia’s last print edition appeared in 1997; the Encyclopedia Americana died in 2006; Compton’s Encyclopedia in 2008; after 244 years in physical form, the Encyclopaedia Britannica published its last print edition in 2010; and The World Book Encyclopedia followed in 2023. Online research engines shuttered as well: the Infoseek website went offline in 2001; MSN Encarta and Groxis (Groovefinder) in 2009; Ask Jeeves (Ask.com) in 2010; AlltheWeb in 2011; MagPortal in 2012; AltaVista in 2013; Yahoo Directory, once a popular directory for organizing websites by category, in 2014; and Scholarpedia went dormant in 2017. These were not entirely replaced by Google, because they were not merely search engines.

Grokker.com, for example, offered a visual search engine that represented search results as a “concept map”; MagPortal indexed online magazine articles; and Scholarpedia was a peer-reviewed, expert-authored encyclopedia that aimed to provide reliable information on scientific topics (it is still online but no longer actively maintained).

As these examples show, what the search engines that followed these conceptual systems lost is a world presented through a web of contextual connections between meaningfully related categories. Instead, we are now, and have been for decades, reduced to searching within a single text field, with no context or connections to learn from.

Today, the research paradigm has evolved entirely on the back end; the front end, the interface, still neither makes conceptual demands nor offers any conceptual structure.

And so we reach the pinnacle of cognitive convenience, or laziness. With AI, there is now little need to do more than simply ask, and we are deprived of the experience of learning gradually by moving through organized content, much as one would when browsing in a bookstore or library. Like a regular search engine, but with much richer formatting and a better grasp of our questions, AI delivers all of the benefits of knowledge with none of the work of research. Having gone beyond research, and even beyond prayer, all we are asked to do now, ironically, is to believe that the response is true because an artificial intelligence produced it.

For any area of curiosity, all labor has been eliminated; there is no need even to think about how to ask the question.

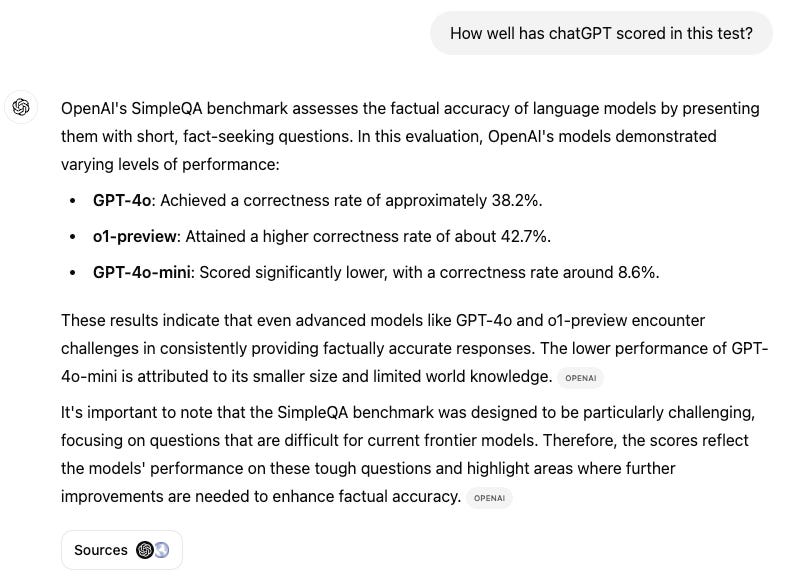

And so it is concerning to learn that AI systems, trained largely on text scraped from the web, produce factually correct statements less than half the time on a test of short, deliberately challenging factual questions. I’m referring to SimpleQA, a benchmark designed by OpenAI to measure the factual accuracy of Large Language Models (LLMs), and thus a way to test the credibility of statements produced by any AI system. Think of LLMs as supermassive archives of related data scraped from many text sources and used as the basis for what an artificial intelligence system “knows.” A test like SimpleQA is not difficult to imagine: ask the AI a set of questions, each of which has a single correct answer known ahead of time. SimpleQA asks an AI more than 4,000 such questions on topics ranging from science and politics to art, and each answer is checked against the known correct response. The ratio of correct answers to total questions determines the factual accuracy of the AI system being tested. When SimpleQA was applied to OpenAI’s own ChatGPT models, how did that popular AI do?
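For readers who want to see the arithmetic behind such a headline number, here is a minimal Python sketch of SimpleQA-style scoring. It is an illustration under stated assumptions, not OpenAI’s code: the `Item` format, the `grade` and `score` helpers, and the exact-match grading are all hypothetical. SimpleQA itself has a language model grade each answer as correct, incorrect, or not attempted; the sketch keeps those three categories but swaps in simple string matching.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Item:
    """One benchmark question paired with its single known answer (hypothetical format)."""
    question: str
    answer: str


def normalize(text: str) -> str:
    # Lowercase and collapse runs of whitespace so " Paris" and "paris" compare equal.
    return " ".join(text.lower().split())


def grade(predicted: Optional[str], gold: str) -> str:
    # Hypothetical stand-in grader: exact match after normalization.
    # SimpleQA uses a language-model grader; this only illustrates the bookkeeping.
    if predicted is None or not predicted.strip():
        return "not_attempted"
    return "correct" if normalize(predicted) == normalize(gold) else "incorrect"


def score(items: list[Item], predictions: list[Optional[str]]) -> dict[str, float]:
    if len(items) != len(predictions):
        raise ValueError("need exactly one prediction per question")
    counts = {"correct": 0, "incorrect": 0, "not_attempted": 0}
    for item, pred in zip(items, predictions):
        counts[grade(pred, item.answer)] += 1
    total = len(items)
    attempted = counts["correct"] + counts["incorrect"]
    return {
        # Correct answers divided by all questions: the headline ratio.
        "overall_correct": counts["correct"] / total if total else 0.0,
        # Correct answers divided by only the questions the model chose to answer.
        "correct_given_attempted": counts["correct"] / attempted if attempted else 0.0,
    }


# Toy usage with made-up model predictions (None means the model declined to answer).
items = [
    Item("What is the capital of France?", "Paris"),
    Item("What is the chemical symbol for gold?", "Au"),
    Item("How many sides does a hexagon have?", "6"),
    Item("At sea level, at what Celsius temperature does water boil?", "100"),
]
predictions = ["paris", "Ag", "6", None]
result = score(items, predictions)
print(result)  # {'overall_correct': 0.5, 'correct_given_attempted': 0.6666666666666666}
```

The two ratios differ because declining to answer lowers the first figure but not the second; a model that stays silent when unsure can look more accurate on the questions it does attempt while still getting fewer questions right overall.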

OpenAI has several AI models; the best performer in the test, o1-preview, scored surprisingly poorly, answering only 42.7% of the questions correctly. OpenAI’s most widely used version, GPT-4o, was correct only 38% of the time. Indeed, ChatGPT itself admits as much.

As the old programming adage “Garbage In, Garbage Out” makes clear, any system’s output is only as accurate as the data it uses. With this in mind, it is troubling that SimpleQA revealed such large inaccuracies in the models behind ChatGPT. Worse still, OpenAI’s researchers found that the models they tested overestimate their own capabilities: asked how confident they were in an answer, they consistently claimed more confidence than their actual accuracy justified. This seems a curiously human attitude.

Perhaps “fake AI” will become the new moniker for our self-deluding times, marked by a total absence of labor devoted to research and truth-seeking. Socrates argued that knowledge and virtue are intrinsically connected; he believed that true knowledge leads to right action, because no one willingly does wrong if they truly understand what is good. In this sense, which is also lost to us now, correct action, the problem of ethics, is all that matters. In the end, we may find that we never needed artificial intelligence to live an authentic life, for knowing what is just, good, or virtuous is more important than technical or superficial knowledge. The highest form of any truth is that which brings itself, and us, to the service of our brethren, and this knowledge is already within us.